Dynamically sending messages to and from processors with MPI and mpi4py

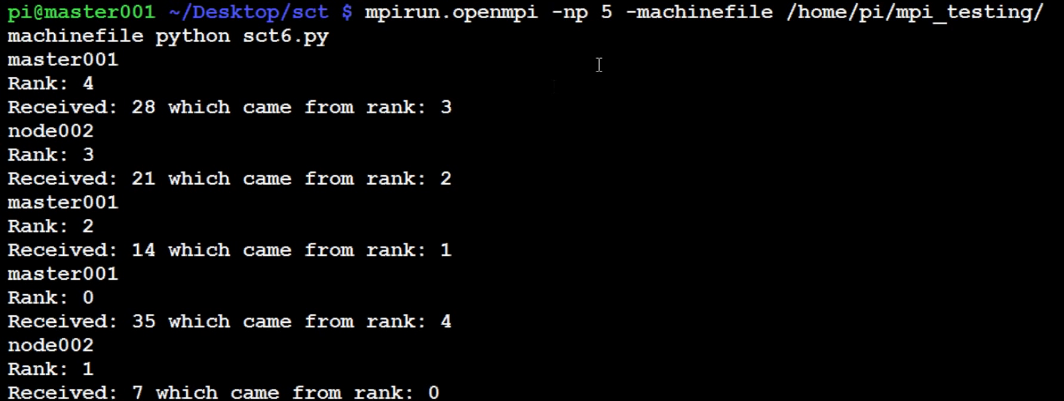

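    from mpi4py import MPI

    comm = MPI.COMM_WORLD
    rank = comm.rank                 # this process's ID within the communicator
    size = comm.size                 # total number of processes
    name = MPI.Get_processor_name()  # hostname of the node running this process

    # Each rank sends a value derived from its own rank number.
    shared = (rank + 1) * 5

    # Send to the next rank up, wrapping around to 0 after the last rank.
    comm.send(shared, dest=(rank + 1) % size)
    # Receive from the previous rank; rank 0 receives from the last rank.
    data = comm.recv(source=(rank - 1) % size)

    print(name)
    print('Rank: {}'.format(rank))
    print('Received: {} which came from rank: {}'.format(data, (rank - 1) % size))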

Now we're going to talk about dynamically sending and receiving messages. Maybe you are running on a heavily distributed network, where the number of available nodes changes often.

Or maybe you just have a large number of nodes, and you don't want to hand-code a destination for each one. Either way, you'll need an algorithm for choosing destination nodes that scales with your network.

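The script above is an example of such an algorithm: every rank always sends to the next rank up, and the modulo operation (`% size`) wraps around to rank 0 once we reach the largest rank number. Each rank likewise receives from the rank just below it, so rank 0 receives from the last rank.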
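To see the wrap-around arithmetic without needing a cluster, here is a minimal sketch in plain Python that simulates the same ring. The helper names `ring_neighbors` and `simulate_ring` are made up for this illustration and are not part of mpi4py; the sketch only mimics which rank would receive which value, it does not send any real messages.

```python
def ring_neighbors(rank, size):
    """Return (dest, source) for `rank` in a ring of `size` ranks."""
    dest = (rank + 1) % size    # next rank up, wrapping to 0 at the end
    source = (rank - 1) % size  # Python's % is non-negative here, so rank 0 maps to size - 1
    return dest, source


def simulate_ring(size):
    """Simulate every rank sending (rank + 1) * 5 to its ring neighbor.

    Returns a list where index i holds the value rank i would receive.
    """
    received = [None] * size
    for rank in range(size):
        dest, _ = ring_neighbors(rank, size)
        received[dest] = (rank + 1) * 5
    return received


if __name__ == "__main__":
    size = 5  # assumed process count, matching -np 5
    for rank, data in enumerate(simulate_ring(size)):
        _, source = ring_neighbors(rank, size)
        print("Rank {} received {} from rank {}".format(rank, data, source))
```

Because the destination and source are computed from `rank` and `size` alone, the same code works unchanged for any number of processes.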

Run the script across five processes:

    mpirun.openmpi -np 5 -machinefile /home/pi/mpi_testing/machinefile python ~/Desktop/sct/sct6.py
