Cryptocurrency-predicting RNN Model - Deep Learning basics with Python, TensorFlow and Keras p.11

Welcome to the next tutorial covering deep learning with Python, Tensorflow, and Keras. We've been working on a cryptocurrency price movement prediction recurrent neural network, focusing mainly on the pre-processing that we've got to do. In this tutorial, we're going to be finishing up by building our model and training it.

Code up to this point:

import numpy as np

import pandas as pd

import random

from collections import deque

from sklearn import preprocessing

SEQ_LEN = 60 # how long of a preceeding sequence to collect for RNN

FUTURE_PERIOD_PREDICT = 3 # how far into the future are we trying to predict?

RATIO_TO_PREDICT = "LTC-USD"

def classify(current, future):

if float(future) > float(current): # if the future price is higher than the current, that's a buy, or a 1

return 1

else: # otherwise... it's a 0!

return 0

def preprocess_df(df):

df = df.drop("future", 1) # don't need this anymore.

for col in df.columns: # go through all of the columns

if col != "target": # normalize all ... except for the target itself!

df[col] = df[col].pct_change() # pct change "normalizes" the different currencies (each crypto coin has vastly diff values, we're really more interested in the other coin's movements)

df.dropna(inplace=True) # remove the nas created by pct_change

df[col] = preprocessing.scale(df[col].values) # scale between 0 and 1.

df.dropna(inplace=True) # cleanup again... jic.

sequential_data = [] # this is a list that will CONTAIN the sequences

prev_days = deque(maxlen=SEQ_LEN) # These will be our actual sequences. They are made with deque, which keeps the maximum length by popping out older values as new ones come in

for i in df.values: # iterate over the values

prev_days.append([n for n in i[:-1]]) # store all but the target

if len(prev_days) == SEQ_LEN: # make sure we have 60 sequences!

sequential_data.append([np.array(prev_days), i[-1]]) # append those bad boys!

random.shuffle(sequential_data) # shuffle for good measure.

buys = [] # list that will store our buy sequences and targets

sells = [] # list that will store our sell sequences and targets

for seq, target in sequential_data: # iterate over the sequential data

if target == 0: # if it's a "not buy"

sells.append([seq, target]) # append to sells list

elif target == 1: # otherwise if the target is a 1...

buys.append([seq, target]) # it's a buy!

random.shuffle(buys) # shuffle the buys

random.shuffle(sells) # shuffle the sells!

lower = min(len(buys), len(sells)) # what's the shorter length?

buys = buys[:lower] # make sure both lists are only up to the shortest length.

sells = sells[:lower] # make sure both lists are only up to the shortest length.

sequential_data = buys+sells # add them together

random.shuffle(sequential_data) # another shuffle, so the model doesn't get confused with all 1 class then the other.

X = []

y = []

for seq, target in sequential_data: # going over our new sequential data

X.append(seq) # X is the sequences

y.append(target) # y is the targets/labels (buys vs sell/notbuy)

return np.array(X), y # return X and y...and make X a numpy array!

main_df = pd.DataFrame() # begin empty

ratios = ["BTC-USD", "LTC-USD", "BCH-USD", "ETH-USD"] # the 4 ratios we want to consider

for ratio in ratios: # begin iteration

ratio = ratio.split('.csv')[0] # split away the ticker from the file-name

dataset = f'training_datas/{ratio}.csv' # get the full path to the file.

df = pd.read_csv(dataset, names=['time', 'low', 'high', 'open', 'close', 'volume']) # read in specific file

# rename volume and close to include the ticker so we can still which close/volume is which:

df.rename(columns={"close": f"{ratio}_close", "volume": f"{ratio}_volume"}, inplace=True)

df.set_index("time", inplace=True) # set time as index so we can join them on this shared time

df = df[[f"{ratio}_close", f"{ratio}_volume"]] # ignore the other columns besides price and volume

if len(main_df)==0: # if the dataframe is empty

main_df = df # then it's just the current df

else: # otherwise, join this data to the main one

main_df = main_df.join(df)

main_df.fillna(method="ffill", inplace=True) # if there are gaps in data, use previously known values

main_df.dropna(inplace=True)

#print(main_df.head()) # how did we do??

main_df['future'] = main_df[f'{RATIO_TO_PREDICT}_close'].shift(-FUTURE_PERIOD_PREDICT)

main_df['target'] = list(map(classify, main_df[f'{RATIO_TO_PREDICT}_close'], main_df['future']))

main_df.dropna(inplace=True)

## here, split away some slice of the future data from the main main_df.

times = sorted(main_df.index.values)

last_5pct = sorted(main_df.index.values)[-int(0.05*len(times))]

validation_main_df = main_df[(main_df.index >= last_5pct)]

main_df = main_df[(main_df.index < last_5pct)]

train_x, train_y = preprocess_df(main_df)

validation_x, validation_y = preprocess_df(validation_main_df)

print(f"train data: {len(train_x)} validation: {len(validation_x)}")

print(f"Dont buys: {train_y.count(0)}, buys: {train_y.count(1)}")

print(f"VALIDATION Dont buys: {validation_y.count(0)}, buys: {validation_y.count(1)}")

Let's make a few more constants:

import time

EPOCHS = 10 # how many passes through our data

BATCH_SIZE = 64 # how many batches? Try smaller batch if you're getting OOM (out of memory) errors.

NAME = f"{SEQ_LEN}-SEQ-{FUTURE_PERIOD_PREDICT}-PRED-{int(time.time())}" # a unique name for the model

Next let's build the model, first we need some imports:

import tensorflow as tf

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Dropout, LSTM, CuDNNLSTM, BatchNormalization

from tensorflow.keras.callbacks import TensorBoard

from tensorflow.keras.callbacks import ModelCheckpoint

Now for the model. I tried a few things like 2 vs 3 layers, 64 vs 128 nodes, and found the following to begin to work:

model = Sequential()

model.add(CuDNNLSTM(128, input_shape=(train_x.shape[1:]), return_sequences=True))

model.add(Dropout(0.2))

model.add(BatchNormalization()) #normalizes activation outputs, same reason you want to normalize your input data.

model.add(CuDNNLSTM(128, return_sequences=True))

model.add(Dropout(0.1))

model.add(BatchNormalization())

model.add(CuDNNLSTM(128))

model.add(Dropout(0.2))

model.add(BatchNormalization())

model.add(Dense(32, activation='relu'))

model.add(Dropout(0.2))

model.add(Dense(2, activation='softmax'))

Model compile settings:

opt = tf.keras.optimizers.Adam(lr=0.001, decay=1e-6)

# Compile model

model.compile(

loss='sparse_categorical_crossentropy',

optimizer=opt,

metrics=['accuracy']

)

TensorBoard callback:

tensorboard = TensorBoard(log_dir="logs/{}".format(NAME))

Next, let's check out the ModelCheckpoint callback

filepath = "RNN_Final-{epoch:02d}-{val_acc:.3f}" # unique file name that will include the epoch and the validation acc for that epoch

checkpoint = ModelCheckpoint("models/{}.model".format(filepath, monitor='val_acc', verbose=1, save_best_only=True, mode='max')) # saves only the best ones

Train the model:

# Train model

history = model.fit(

train_x, train_y,

batch_size=BATCH_SIZE,

epochs=EPOCHS,

validation_data=(validation_x, validation_y),

callbacks=[tensorboard, checkpoint],

)

Run evaluations:

# Score model

score = model.evaluate(validation_x, validation_y, verbose=0)

print('Test loss:', score[0])

print('Test accuracy:', score[1])

# Save model

model.save("models/{}".format(NAME))

Full code up to this point and running it:

import pandas as pd

from collections import deque

import random

import numpy as np

import tensorflow as tf

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Dropout, LSTM, CuDNNLSTM, BatchNormalization

from tensorflow.keras.callbacks import TensorBoard

from tensorflow.keras.callbacks import ModelCheckpoint, ModelCheckpoint

import time

from sklearn import preprocessing

SEQ_LEN = 60 # how long of a preceeding sequence to collect for RNN

FUTURE_PERIOD_PREDICT = 3 # how far into the future are we trying to predict?

RATIO_TO_PREDICT = "LTC-USD"

EPOCHS = 10 # how many passes through our data

BATCH_SIZE = 64 # how many batches? Try smaller batch if you're getting OOM (out of memory) errors.

NAME = f"{SEQ_LEN}-SEQ-{FUTURE_PERIOD_PREDICT}-PRED-{int(time.time())}"

def classify(current, future):

if float(future) > float(current): # if the future price is higher than the current, that's a buy, or a 1

return 1

else: # otherwise... it's a 0!

return 0

def preprocess_df(df):

df = df.drop("future", 1) # don't need this anymore.

for col in df.columns: # go through all of the columns

if col != "target": # normalize all ... except for the target itself!

df[col] = df[col].pct_change() # pct change "normalizes" the different currencies (each crypto coin has vastly diff values, we're really more interested in the other coin's movements)

df.dropna(inplace=True) # remove the nas created by pct_change

df[col] = preprocessing.scale(df[col].values) # scale between 0 and 1.

df.dropna(inplace=True) # cleanup again... jic.

sequential_data = [] # this is a list that will CONTAIN the sequences

prev_days = deque(maxlen=SEQ_LEN) # These will be our actual sequences. They are made with deque, which keeps the maximum length by popping out older values as new ones come in

for i in df.values: # iterate over the values

prev_days.append([n for n in i[:-1]]) # store all but the target

if len(prev_days) == SEQ_LEN: # make sure we have 60 sequences!

sequential_data.append([np.array(prev_days), i[-1]]) # append those bad boys!

random.shuffle(sequential_data) # shuffle for good measure.

buys = [] # list that will store our buy sequences and targets

sells = [] # list that will store our sell sequences and targets

for seq, target in sequential_data: # iterate over the sequential data

if target == 0: # if it's a "not buy"

sells.append([seq, target]) # append to sells list

elif target == 1: # otherwise if the target is a 1...

buys.append([seq, target]) # it's a buy!

random.shuffle(buys) # shuffle the buys

random.shuffle(sells) # shuffle the sells!

lower = min(len(buys), len(sells)) # what's the shorter length?

buys = buys[:lower] # make sure both lists are only up to the shortest length.

sells = sells[:lower] # make sure both lists are only up to the shortest length.

sequential_data = buys+sells # add them together

random.shuffle(sequential_data) # another shuffle, so the model doesn't get confused with all 1 class then the other.

X = []

y = []

for seq, target in sequential_data: # going over our new sequential data

X.append(seq) # X is the sequences

y.append(target) # y is the targets/labels (buys vs sell/notbuy)

return np.array(X), y # return X and y...and make X a numpy array!

main_df = pd.DataFrame() # begin empty

ratios = ["BTC-USD", "LTC-USD", "BCH-USD", "ETH-USD"] # the 4 ratios we want to consider

for ratio in ratios: # begin iteration

ratio = ratio.split('.csv')[0] # split away the ticker from the file-name

print(ratio)

dataset = f'training_datas/{ratio}.csv' # get the full path to the file.

df = pd.read_csv(dataset, names=['time', 'low', 'high', 'open', 'close', 'volume']) # read in specific file

# rename volume and close to include the ticker so we can still which close/volume is which:

df.rename(columns={"close": f"{ratio}_close", "volume": f"{ratio}_volume"}, inplace=True)

df.set_index("time", inplace=True) # set time as index so we can join them on this shared time

df = df[[f"{ratio}_close", f"{ratio}_volume"]] # ignore the other columns besides price and volume

if len(main_df)==0: # if the dataframe is empty

main_df = df # then it's just the current df

else: # otherwise, join this data to the main one

main_df = main_df.join(df)

main_df.fillna(method="ffill", inplace=True) # if there are gaps in data, use previously known values

main_df.dropna(inplace=True)

#print(main_df.head()) # how did we do??

main_df['future'] = main_df[f'{RATIO_TO_PREDICT}_close'].shift(-FUTURE_PERIOD_PREDICT)

main_df['target'] = list(map(classify, main_df[f'{RATIO_TO_PREDICT}_close'], main_df['future']))

main_df.dropna(inplace=True)

## here, split away some slice of the future data from the main main_df.

times = sorted(main_df.index.values)

last_5pct = sorted(main_df.index.values)[-int(0.05*len(times))]

validation_main_df = main_df[(main_df.index >= last_5pct)]

main_df = main_df[(main_df.index < last_5pct)]

train_x, train_y = preprocess_df(main_df)

validation_x, validation_y = preprocess_df(validation_main_df)

print(f"train data: {len(train_x)} validation: {len(validation_x)}")

print(f"Dont buys: {train_y.count(0)}, buys: {train_y.count(1)}")

print(f"VALIDATION Dont buys: {validation_y.count(0)}, buys: {validation_y.count(1)}")

model = Sequential()

model.add(CuDNNLSTM(128, input_shape=(train_x.shape[1:]), return_sequences=True))

model.add(Dropout(0.2))

model.add(BatchNormalization())

model.add(CuDNNLSTM(128, return_sequences=True))

model.add(Dropout(0.1))

model.add(BatchNormalization())

model.add(CuDNNLSTM(128))

model.add(Dropout(0.2))

model.add(BatchNormalization())

model.add(Dense(32, activation='relu'))

model.add(Dropout(0.2))

model.add(Dense(2, activation='softmax'))

opt = tf.keras.optimizers.Adam(lr=0.001, decay=1e-6)

# Compile model

model.compile(

loss='sparse_categorical_crossentropy',

optimizer=opt,

metrics=['accuracy']

)

tensorboard = TensorBoard(log_dir="logs/{}".format(NAME))

filepath = "RNN_Final-{epoch:02d}-{val_acc:.3f}" # unique file name that will include the epoch and the validation acc for that epoch

checkpoint = ModelCheckpoint("models/{}.model".format(filepath, monitor='val_acc', verbose=1, save_best_only=True, mode='max')) # saves only the best ones

# Train model

history = model.fit(

train_x, train_y,

batch_size=BATCH_SIZE,

epochs=EPOCHS,

validation_data=(validation_x, validation_y),

callbacks=[tensorboard, checkpoint],

)

# Score model

score = model.evaluate(validation_x, validation_y, verbose=0)

print('Test loss:', score[0])

print('Test accuracy:', score[1])

# Save model

model.save("models/{}".format(NAME))

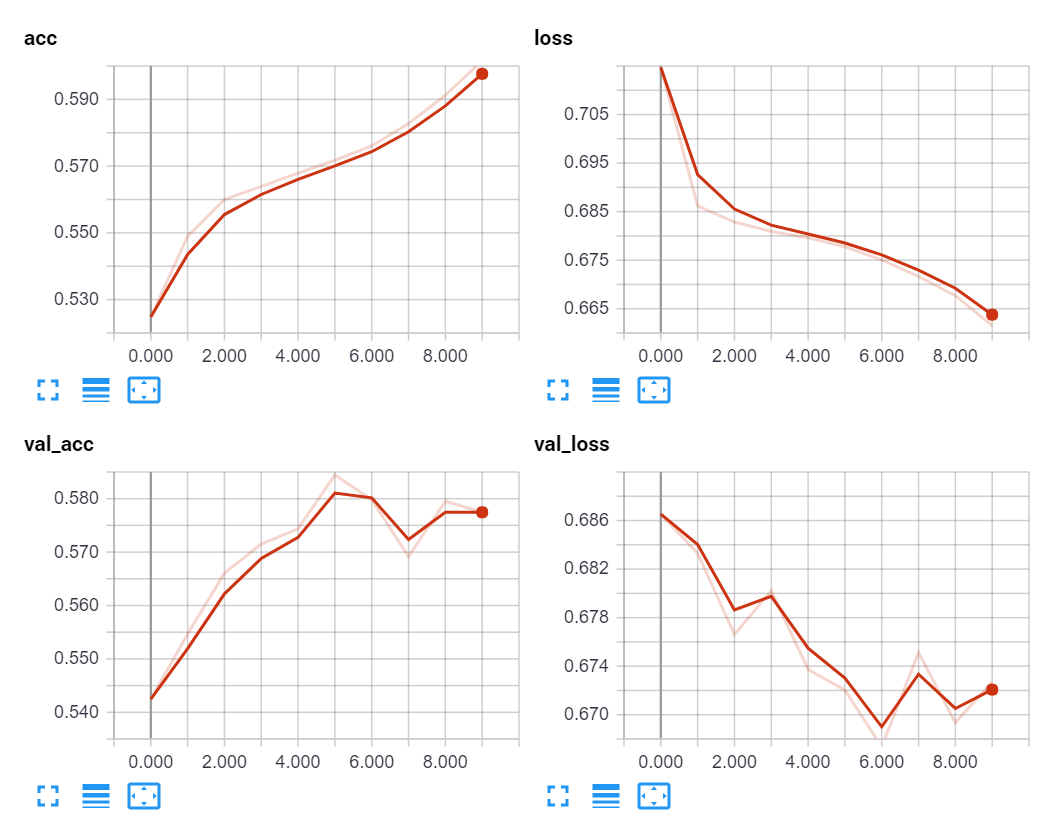

Not a bad start. Better than random, validation accuracy rises over time, validation loss drops.

Changing the name constant to include the ratio we're predicting:

NAME = f"{RATIO_TO_PREDICT}-{SEQ_LEN}-SEQ-{FUTURE_PERIOD_PREDICT}-PRED-{int(time.time())}"

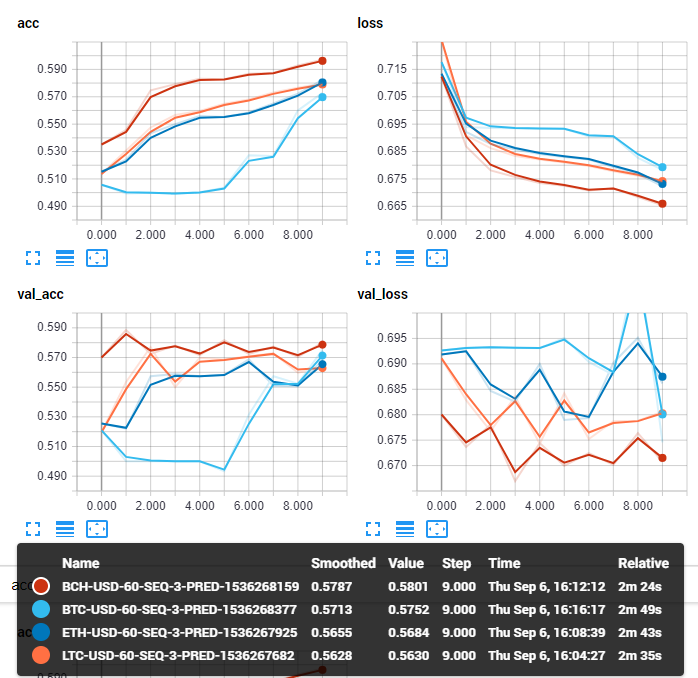

And then testing against all of the ratios:

Looks pretty good. Use at your own risk, there are probably bugs. Finance is hard. Historical results are not indicative of future results. This is for educational use only.

... you get the idea! That's all for this project.

-

Introduction to Deep Learning - Deep Learning basics with Python, TensorFlow and Keras p.1

-

Loading in your own data - Deep Learning basics with Python, TensorFlow and Keras p.2

-

Convolutional Neural Networks - Deep Learning basics with Python, TensorFlow and Keras p.3

-

Analyzing Models with TensorBoard - Deep Learning basics with Python, TensorFlow and Keras p.4

-

Optimizing Models with TensorBoard - Deep Learning basics with Python, TensorFlow and Keras p.5

-

How to use your trained model - Deep Learning basics with Python, TensorFlow and Keras p.6

-

Recurrent Neural Networks - Deep Learning basics with Python, TensorFlow and Keras p.7

-

Creating a Cryptocurrency-predicting finance recurrent neural network - Deep Learning basics with Python, TensorFlow and Keras p.8

-

Normalizing and creating sequences for our cryptocurrency predicting RNN - Deep Learning basics with Python, TensorFlow and Keras p.9

-

Balancing Recurrent Neural Network sequence data for our crypto predicting RNN - Deep Learning basics with Python, TensorFlow and Keras p.10

-

Cryptocurrency-predicting RNN Model - Deep Learning basics with Python, TensorFlow and Keras p.11