Deep Learning with SC2 Intro - Python AI in StarCraft II p.7

Hello and welcome to part 7 of our artificial intelligence with StarCraft II and Python programming tutorial series. In this part, and the next few parts, we will be considering the addition of deep learning, but, first, we have to decide what form of deep learning to use!

I believe the most applicable form of machine learning to apply in this case is an evolutionary application of deep learning. I know all the rage right now is reinforcement learning, specifically Q-learning, but I don't see where Q-learning is fundamentally going to work here better than other options, unless we dramatically simplify this challenge, or have hardware that vastly exceeds anything I could reasonably afford along with an extremely complex network. The idea of Q-learning is to distribute a reward or penalty across steps for a given pass through an environment, and a full StarCraft II game is a very long pass with a huge number of steps. While we could work within slices of the StarCraft II environment to get around that...why? Other than for clickbait, I just can't justify Q-learning here.

What's the plan with the evolutionary algorithm then? Evolutionary algorithms are similar to reinforcement learning algorithms, so much so that I would argue that they are a form of reinforcement learning algorithms. The main idea of reinforcement learning is to reinforce good choices, through an end target result. One of the major pitfalls of reinforcement learning is that people forget that they should be just simply reinforcing an end result, and nothing else, letting the algorithm figure out how to best get to that end result without human bias. What else reinforces some end result? Evolution does! What's the end result that is reinforced? Procreation.

With an evolutionary algorithm, in the case of StarCraft II, you allow the winning algorithm to be a part of the gene pool (training data), and the loser is forgotten.

What I propose we do first is simply figure out attacking, or at least try.

I do want to highlight that I really do not know the answer here. This is all trial and error for me now. Maybe Q-learning is the best choice, or maybe something else is, or maybe there's a better way of structuring things than the one I use here. This is just going to be my journey through trying things and sharing it with you.

Our script from before:

import sc2
from sc2 import run_game, maps, Race, Difficulty
from sc2.player import Bot, Computer
from sc2.constants import NEXUS, PROBE, PYLON, ASSIMILATOR, GATEWAY, \
    CYBERNETICSCORE, STALKER, STARGATE, VOIDRAY
import random


class SentdeBot(sc2.BotAI):
    def __init__(self):
        self.ITERATIONS_PER_MINUTE = 165
        self.MAX_WORKERS = 50

    async def on_step(self, iteration):
        self.iteration = iteration
        await self.distribute_workers()
        await self.build_workers()
        await self.build_pylons()
        await self.build_assimilators()
        await self.expand()
        await self.offensive_force_buildings()
        await self.build_offensive_force()
        await self.attack()

    async def build_workers(self):
        if (len(self.units(NEXUS)) * 16) > len(self.units(PROBE)) and len(self.units(PROBE)) < self.MAX_WORKERS:
            for nexus in self.units(NEXUS).ready.noqueue:
                if self.can_afford(PROBE):
                    await self.do(nexus.train(PROBE))

    async def build_pylons(self):
        if self.supply_left < 5 and not self.already_pending(PYLON):
            nexuses = self.units(NEXUS).ready
            if nexuses.exists:
                if self.can_afford(PYLON):
                    await self.build(PYLON, near=nexuses.first)

    async def build_assimilators(self):
        for nexus in self.units(NEXUS).ready:
            vaspenes = self.state.vespene_geyser.closer_than(15.0, nexus)
            for vaspene in vaspenes:
                if not self.can_afford(ASSIMILATOR):
                    break
                worker = self.select_build_worker(vaspene.position)
                if worker is None:
                    break
                if not self.units(ASSIMILATOR).closer_than(1.0, vaspene).exists:
                    await self.do(worker.build(ASSIMILATOR, vaspene))

    async def expand(self):
        if self.units(NEXUS).amount < (self.iteration / self.ITERATIONS_PER_MINUTE) and self.can_afford(NEXUS):
            await self.expand_now()

    async def offensive_force_buildings(self):
        #print(self.iteration / self.ITERATIONS_PER_MINUTE)
        if self.units(PYLON).ready.exists:
            pylon = self.units(PYLON).ready.random

            if self.units(GATEWAY).ready.exists and not self.units(CYBERNETICSCORE):
                if self.can_afford(CYBERNETICSCORE) and not self.already_pending(CYBERNETICSCORE):
                    await self.build(CYBERNETICSCORE, near=pylon)

            elif len(self.units(GATEWAY)) < ((self.iteration / self.ITERATIONS_PER_MINUTE)/2):
                if self.can_afford(GATEWAY) and not self.already_pending(GATEWAY):
                    await self.build(GATEWAY, near=pylon)

            if self.units(CYBERNETICSCORE).ready.exists:
                if len(self.units(STARGATE)) < ((self.iteration / self.ITERATIONS_PER_MINUTE)/2):
                    if self.can_afford(STARGATE) and not self.already_pending(STARGATE):
                        await self.build(STARGATE, near=pylon)

    async def build_offensive_force(self):
        for gw in self.units(GATEWAY).ready.noqueue:
            if not self.units(STALKER).amount > self.units(VOIDRAY).amount:
                if self.can_afford(STALKER) and self.supply_left > 0:
                    await self.do(gw.train(STALKER))

        for sg in self.units(STARGATE).ready.noqueue:
            if self.can_afford(VOIDRAY) and self.supply_left > 0:
                await self.do(sg.train(VOIDRAY))

    def find_target(self, state):
        if len(self.known_enemy_units) > 0:
            return random.choice(self.known_enemy_units)
        elif len(self.known_enemy_structures) > 0:
            return random.choice(self.known_enemy_structures)
        else:
            return self.enemy_start_locations[0]

    async def attack(self):
        # {UNIT: [n to fight, n to defend]}
        aggressive_units = {STALKER: [15, 5],
                            VOIDRAY: [8, 3]}

        for UNIT in aggressive_units:
            if self.units(UNIT).amount > aggressive_units[UNIT][0] and self.units(UNIT).amount > aggressive_units[UNIT][1]:
                for s in self.units(UNIT).idle:
                    await self.do(s.attack(self.find_target(self.state)))

            elif self.units(UNIT).amount > aggressive_units[UNIT][1]:
                if len(self.known_enemy_units) > 0:
                    for s in self.units(UNIT).idle:
                        await self.do(s.attack(random.choice(self.known_enemy_units)))


run_game(maps.get("AbyssalReefLE"), [
    Bot(Race.Protoss, SentdeBot()),
    Computer(Race.Terran, Difficulty.Hard)
    ], realtime=False)

Let's instead make *only* Void Ray units, and then we'll modify the attacking protocol. First, let's modify the buildings method:

    async def offensive_force_buildings(self):
        #print(self.iteration / self.ITERATIONS_PER_MINUTE)
        if self.units(PYLON).ready.exists:
            pylon = self.units(PYLON).ready.random

            if self.units(GATEWAY).ready.exists and not self.units(CYBERNETICSCORE):
                if self.can_afford(CYBERNETICSCORE) and not self.already_pending(CYBERNETICSCORE):
                    await self.build(CYBERNETICSCORE, near=pylon)

            elif len(self.units(GATEWAY)) < 1:
                if self.can_afford(GATEWAY) and not self.already_pending(GATEWAY):
                    await self.build(GATEWAY, near=pylon)

            if self.units(CYBERNETICSCORE).ready.exists:
                if len(self.units(STARGATE)) < (self.iteration / self.ITERATIONS_PER_MINUTE):  # this too
                    if self.can_afford(STARGATE) and not self.already_pending(STARGATE):
                        await self.build(STARGATE, near=pylon)

We no longer halve the Stargates and we only build a gateway if we don't have one yet.
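To make the time-based caps concrete, here is a small standalone sketch of just the arithmetic. The helper names (`minutes_elapsed`, `wants_another_stargate`, `wants_gateway`) are made up for illustration and are not part of python-sc2; the sketch also ignores the affordability, pending-build, and Cybernetics Core checks that the real method performs.

```python
ITERATIONS_PER_MINUTE = 165  # same constant the bot uses


def minutes_elapsed(iteration):
    # Rough game time in minutes, as the bot estimates it.
    return iteration / ITERATIONS_PER_MINUTE


def wants_another_stargate(stargate_count, iteration):
    # Same comparison as in offensive_force_buildings:
    # roughly one Stargate allowed per elapsed minute.
    return stargate_count < minutes_elapsed(iteration)


def wants_gateway(gateway_count):
    # Only build a Gateway if we have none yet.
    return gateway_count < 1


for iteration in (0, 165, 330, 825):
    print(iteration, minutes_elapsed(iteration), wants_another_stargate(2, iteration))
```

With two Stargates already built, the check only turns true once more than two minutes' worth of iterations have passed.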

Next, our offensive force method now becomes:

    async def build_offensive_force(self):
        for sg in self.units(STARGATE).ready.noqueue:
            if self.can_afford(VOIDRAY) and self.supply_left > 0:
                await self.do(sg.train(VOIDRAY))

Finally, our attack method becomes quite simple:

    async def attack(self):
        # {UNIT: [n to fight, n to defend]}
        aggressive_units = {
            #STALKER: [15, 5],
            VOIDRAY: [8, 3],
        }

        for UNIT in aggressive_units:
            for s in self.units(UNIT).idle:
                await self.do(s.attack(self.find_target(self.state)))

We have some choices here. We can either attack/not attack as a whole, or we could do it on a per-unit basis. I think it would be more interesting to do it on a per-unit basis, but also more complex. Let's try to keep things simple for now and do it all together to start. Thus, as an army, we either all attack, or not.

Next, how do we make this decision? Well, in general, we'd like to build a bunch of logic for it, but, the idea of deep learning is to replace logic with a neural network! The challenge instead becomes: How do we inform the neural network? Well, in the case of this game, we have a lot of variables, and the number of variables is...well...variable. We have a variable number of workers and a variable number of military units. We even have a variable number of TYPES of military units that we might use!

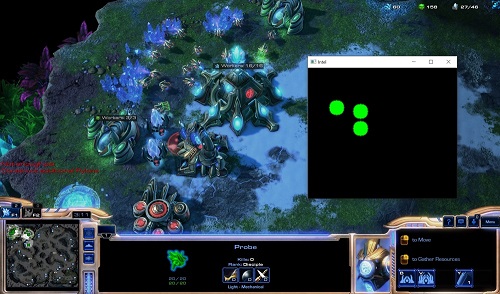

Immediately, I would consider visualizing this data, and passing that to the network. That said, it would also actually be useful to see the placement of our buildings, units, and the enemy buildings/units too. For this reason, I think it's wise to feed the neural network imagery, which then suggests that we use a convolutional neural network. Alright then, let's do that.

WELL, not so fast there Johnny. I know you just want to make the network and rule the world of StarCraft, but it's not so simple. We need data first. A lot of data. Mainly, these images tied to actions. Initially, we can just take random actions, and then save the winning actions, that's no big deal. But...we need that imagery. So, first, we have to put in some effort to build the imagery that we want to pass to the network.
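As a rough sketch of what "random actions, then save the winning actions" could look like, here is a standalone example. Everything in it is an assumption for illustration: the `GameRecorder` class, the 0 = hold / 1 = attack encoding for the army-wide choice, the blank 200x200 stand-in images, and the hard-coded win/loss results. It is not how the series ends up storing its data, just the general shape of the idea.

```python
import random

import numpy as np


class GameRecorder:
    """Hypothetical helper: collects (image, action) pairs for one game."""

    def __init__(self, seed=None):
        self.rng = random.Random(seed)
        self.samples = []

    def choose_and_record(self, image):
        # One army-wide choice per step: 0 = hold, 1 = attack (assumed encoding).
        action = self.rng.choice([0, 1])
        # Copy the image so later drawing doesn't change what we stored.
        self.samples.append((image.copy(), action))
        return action

    def finish(self, won):
        # Keep the samples only if this game was a win; a loss is forgotten.
        return self.samples if won else []


training_data = []
# Pretend results for three games: win, loss, win.
for game_index, won in enumerate([True, False, True]):
    recorder = GameRecorder(seed=game_index)
    for _ in range(5):
        fake_image = np.zeros((200, 200, 3), np.uint8)  # stand-in for the drawn map
        recorder.choose_and_record(fake_image)
    training_data.extend(recorder.finish(won))

print(len(training_data))  # 10 samples: 5 from each of the two wins
```

The point is only that each stored sample pairs what the bot "saw" with what it chose, and that the filter happens once per game, at the end.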

It shouldn't be too challenging to do this, we have access to all the information we need, we just have to draw it. Let's draw our Nexuses for example.

To start, we need to import numpy and opencv. You may need to install these. If you're having trouble grabbing OpenCV (cv2), see installing OpenCV. If you still can't figure it out, pop into our Python Discord and someone can help you there.

import cv2
import numpy as np

Next, let's add to our on_step method, before running the attack method:

await self.intel()

We want to do this before the attack since the intel will inform the attack method most likely.

Now for our intel method:

To start with a blacked-out "screen" that is the size of the game map, we will use:

game_data = np.zeros((self.game_info.map_size[1], self.game_info.map_size[0], 3), np.uint8)

An image array is shaped height by width (rows, then columns), the reverse of the width-by-height order we usually use for images, so we swap the [0] and [1] elements of map_size. Different maps have different sizes, but they're about 200x200.
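If the height-by-width ordering feels backwards, a quick NumPy-only check may help. The map size below (200 wide, 176 tall) is made up so the two dimensions are easy to tell apart; it is not read from the game.

```python
import numpy as np

# Made-up map size, deliberately non-square so the axes are distinguishable.
map_width, map_height = 200, 176

# Rows (height) come first, columns (width) second, then 3 color channels.
game_data = np.zeros((map_height, map_width, 3), np.uint8)
print(game_data.shape)  # (176, 200, 3)

# Indexing follows the same order: [y, x], not [x, y].
x, y = 150, 20
game_data[y, x] = (0, 255, 0)  # a single green pixel in BGR order
print(game_data[20, 150])
```

Note that drawing functions such as cv2.circle take their center point as (x, y), which is why the drawing code passes position [0] first even though the array itself is indexed [y, x].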

Next, we can draw something like our Nexuses on the map with:

for nexus in self.units(NEXUS):
    nex_pos = nexus.position
    print(nex_pos)
    cv2.circle(game_data, (int(nex_pos[0]), int(nex_pos[1])), 10, (0, 255, 0), -1)  # BGR

Images are usually described as width by height while arrays are height by width, but there's one more difference: OpenCV puts 0, 0 at the top left. This is okay, except the game's coordinate system doesn't follow that rule. So we need to flip the image vertically:

flipped = cv2.flip(game_data, 0)
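To see what that flip does without opening a game, here is a tiny NumPy-only sketch. It uses np.flipud, which reverses the row order just as cv2.flip with a flip code of 0 does, and it assumes (as the text above implies) that the game's y axis grows upward from the bottom edge. The 4x6 grid is made up for illustration.

```python
import numpy as np

height, width = 4, 6
game_data = np.zeros((height, width), np.uint8)

# A unit at game position x=1, y=0, i.e. on the bottom edge of the map
# under the bottom-left-origin assumption.
game_x, game_y = 1, 0
game_data[game_y, game_x] = 255

# Unflipped, row 0 is drawn at the TOP of the image, so the unit looks wrong.
print(game_data)

# Reversing the rows puts y=0 on the bottom row of the picture.
flipped = np.flipud(game_data)
print(flipped)

rows, cols = np.nonzero(flipped)
print(rows[0], cols[0])  # row 3 (the last row), column 1
```

Only the rows move; the x position of the unit is unchanged.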

Then we resize it to make it easier to see (here, doubling both dimensions) and show it with:

resized = cv2.resize(flipped, dsize=None, fx=2, fy=2)
cv2.imshow('Intel', resized)
cv2.waitKey(1)

The full intel method:

    async def intel(self):
        # for game_info: https://github.com/Dentosal/python-sc2/blob/master/sc2/game_info.py#L162
        print(self.game_info.map_size)
        # flip around. It's y, x when you're dealing with an array.
        game_data = np.zeros((self.game_info.map_size[1], self.game_info.map_size[0], 3), np.uint8)
        for nexus in self.units(NEXUS):
            nex_pos = nexus.position
            print(nex_pos)
            cv2.circle(game_data, (int(nex_pos[0]), int(nex_pos[1])), 10, (0, 255, 0), -1)  # BGR

        # flip vertically (flip code 0) to make our final fix in visual representation:
        flipped = cv2.flip(game_data, 0)
        resized = cv2.resize(flipped, dsize=None, fx=2, fy=2)

        cv2.imshow('Intel', resized)
        cv2.waitKey(1)

Full code up to this point:

import sc2
from sc2 import run_game, maps, Race, Difficulty
from sc2.player import Bot, Computer
from sc2.constants import NEXUS, PROBE, PYLON, ASSIMILATOR, GATEWAY, \
    CYBERNETICSCORE, STARGATE, VOIDRAY
import random
import cv2
import numpy as np


class SentdeBot(sc2.BotAI):
    def __init__(self):
        self.ITERATIONS_PER_MINUTE = 165
        self.MAX_WORKERS = 50

    async def on_step(self, iteration):
        self.iteration = iteration
        await self.distribute_workers()
        await self.build_workers()
        await self.build_pylons()
        await self.build_assimilators()
        await self.expand()
        await self.offensive_force_buildings()
        await self.build_offensive_force()
        await self.intel()
        await self.attack()

    async def intel(self):
        # for game_info: https://github.com/Dentosal/python-sc2/blob/master/sc2/game_info.py#L162
        # print(self.game_info.map_size)
        # flip around. It's y, x when you're dealing with an array.
        game_data = np.zeros((self.game_info.map_size[1], self.game_info.map_size[0], 3), np.uint8)
        for nexus in self.units(NEXUS):
            nex_pos = nexus.position
            print(nex_pos)
            cv2.circle(game_data, (int(nex_pos[0]), int(nex_pos[1])), 10, (0, 255, 0), -1)  # BGR

        # flip vertically (flip code 0) to make our final fix in visual representation:
        flipped = cv2.flip(game_data, 0)
        resized = cv2.resize(flipped, dsize=None, fx=2, fy=2)

        cv2.imshow('Intel', resized)
        cv2.waitKey(1)

    async def build_workers(self):
        if (len(self.units(NEXUS)) * 16) > len(self.units(PROBE)) and len(self.units(PROBE)) < self.MAX_WORKERS:
            for nexus in self.units(NEXUS).ready.noqueue:
                if self.can_afford(PROBE):
                    await self.do(nexus.train(PROBE))

    async def build_pylons(self):
        if self.supply_left < 5 and not self.already_pending(PYLON):
            nexuses = self.units(NEXUS).ready
            if nexuses.exists:
                if self.can_afford(PYLON):
                    await self.build(PYLON, near=nexuses.first)

    async def build_assimilators(self):
        for nexus in self.units(NEXUS).ready:
            vaspenes = self.state.vespene_geyser.closer_than(15.0, nexus)
            for vaspene in vaspenes:
                if not self.can_afford(ASSIMILATOR):
                    break
                worker = self.select_build_worker(vaspene.position)
                if worker is None:
                    break
                if not self.units(ASSIMILATOR).closer_than(1.0, vaspene).exists:
                    await self.do(worker.build(ASSIMILATOR, vaspene))

    async def expand(self):
        if self.units(NEXUS).amount < (self.iteration / self.ITERATIONS_PER_MINUTE) and self.can_afford(NEXUS):
            await self.expand_now()

    async def offensive_force_buildings(self):
        if self.units(PYLON).ready.exists:
            pylon = self.units(PYLON).ready.random

            if self.units(GATEWAY).ready.exists and not self.units(CYBERNETICSCORE):
                if self.can_afford(CYBERNETICSCORE) and not self.already_pending(CYBERNETICSCORE):
                    await self.build(CYBERNETICSCORE, near=pylon)

            elif len(self.units(GATEWAY)) < 1:
                if self.can_afford(GATEWAY) and not self.already_pending(GATEWAY):
                    await self.build(GATEWAY, near=pylon)

            if self.units(CYBERNETICSCORE).ready.exists:
                if len(self.units(STARGATE)) < (self.iteration / self.ITERATIONS_PER_MINUTE):  # this too
                    if self.can_afford(STARGATE) and not self.already_pending(STARGATE):
                        await self.build(STARGATE, near=pylon)

    async def build_offensive_force(self):
        for sg in self.units(STARGATE).ready.noqueue:
            if self.can_afford(VOIDRAY) and self.supply_left > 0:
                await self.do(sg.train(VOIDRAY))

    def find_target(self, state):
        if len(self.known_enemy_units) > 0:
            return random.choice(self.known_enemy_units)
        elif len(self.known_enemy_structures) > 0:
            return random.choice(self.known_enemy_structures)
        else:
            return self.enemy_start_locations[0]

    async def attack(self):
        # {UNIT: [n to fight, n to defend]}
        aggressive_units = {
            #STALKER: [15, 5],
            VOIDRAY: [8, 3],
        }

        for UNIT in aggressive_units:
            for s in self.units(UNIT).idle:
                await self.do(s.attack(self.find_target(self.state)))


run_game(maps.get("AbyssalReefLE"), [
    Bot(Race.Protoss, SentdeBot()),
    Computer(Race.Terran, Difficulty.Hard)
    ], realtime=False)

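You should now see something like an OpenCV window titled "Intel": a black image of the map with a filled green circle at each of our Nexuses.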
